MNIST digits recognition using Keras and CNNs

This is a simple example of building a sequential convolutional neural network (CNN) in TensorFlow Keras and training it to recognize handwritten digits from the MNIST dataset, today's "Hello World" of image recognition.

Import the required libraries:

from tensorflow.keras import layers

from tensorflow.keras import models

Create Sequential model:

model = models.Sequential()

model.add(layers.Conv2D(32, (3,3), activation='relu', input_shape=(28,28,1)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3,3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3,3), activation='relu'))

model.add(layers.Flatten())

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(10, activation='softmax'))

Print out summary of the model:

model.summary()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 26, 26, 32) 320

_________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 13, 13, 32) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 11, 11, 64) 18496

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 5, 5, 64) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 3, 3, 64) 36928

_________________________________________________________________

flatten (Flatten) (None, 576) 0

_________________________________________________________________

dense (Dense) (None, 64) 36928

_________________________________________________________________

dense_1 (Dense) (None, 10) 650

=================================================================

Total params: 93,322

Trainable params: 93,322

Non-trainable params: 0

_________________________________________________________________
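The output shapes and parameter counts in the summary can be reproduced by hand. The sketch below is plain Python rather than Keras code, and the helper names (`conv_out`, `pool_out`, `conv_params`, `dense_params`) are made up for illustration. It assumes 3x3 convolutions with stride 1 and no padding, and non-overlapping 2x2 max pooling, which matches the shapes reported above.

```python
def conv_out(size, kernel=3):
    """Spatial size after an unpadded convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Spatial size after non-overlapping max pooling (rounds down)."""
    return size // pool

def conv_params(in_channels, filters, kernel=3):
    """kernel*kernel*in_channels weights per filter, plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per (input, unit) pair, plus one bias per unit."""
    return inputs * units + units

size = 28
size = conv_out(size)    # 26
size = pool_out(size)    # 13
size = conv_out(size)    # 11
size = pool_out(size)    # 5
size = conv_out(size)    # 3
flat = size * size * 64  # 576 values after Flatten

params = [
    conv_params(1, 32),      # conv2d
    conv_params(32, 64),     # conv2d_1
    conv_params(64, 64),     # conv2d_2
    dense_params(flat, 64),  # dense
    dense_params(64, 10),    # dense_1
]
print(size, flat, params, sum(params))
```

The pooling layers and Flatten have no weights, which is why they show 0 parameters in the summary.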

Import MNIST dataset and to_categorical function

from tensorflow.keras.datasets import mnist

from tensorflow.keras.utils import to_categorical

Load the training and test data

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

Reshape and normalize the images, and one-hot encode the labels

train_images = train_images.reshape((60000, 28, 28, 1))

train_images = train_images.astype('float32') / 255

test_images = test_images.reshape((10000, 28, 28, 1))

test_images = test_images.astype('float32') / 255

train_labels = to_categorical(train_labels)

test_labels = to_categorical(test_labels)
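To make the preprocessing concrete, here is a minimal plain-Python sketch of what happens to individual pixel values and to one label. The `normalize` and `one_hot` helpers are hypothetical stand-ins written for this illustration; they only mimic the effect of dividing by 255 and of `to_categorical` on an integer label, and are not how Keras implements it.

```python
def normalize(pixels):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [p / 255 for p in pixels]

def one_hot(label, num_classes=10):
    """Return a vector with 1.0 at index `label` and 0.0 elsewhere."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

scaled = normalize([0, 51, 255])  # black, dark grey, white
encoded = one_hot(7)              # the digit 7 as a 10-element vector
print(scaled)
print(encoded)
```

The one-hot form matches the 10-unit softmax output layer, so each label can be compared directly with the predicted probabilities.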

Compile and train the model

model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

model.fit(train_images, train_labels, epochs=5, batch_size=64)

Epoch 1/5

60000/60000 [==============================] - 5s 77us/step - loss: 0.1711 - acc: 0.9471

Epoch 2/5

60000/60000 [==============================] - 4s 59us/step - loss: 0.0488 - acc: 0.9849

Epoch 3/5

60000/60000 [==============================] - 4s 60us/step - loss: 0.0336 - acc: 0.9898

Epoch 4/5

60000/60000 [==============================] - 4s 59us/step - loss: 0.0255 - acc: 0.9924

Epoch 5/5

60000/60000 [==============================] - 4s 59us/step - loss: 0.0196 - acc: 0.9940

<tensorflow.python.keras.callbacks.History at 0x7f07860745f8>
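The `loss` column in the log is the categorical cross-entropy averaged over the training samples. As a rough sketch of what that number means for a single sample, the plain-Python functions below compute a softmax and the cross-entropy against a one-hot label. They are illustrative only, share nothing with the Keras implementation, and skip the numerical safeguards a real implementation needs.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_crossentropy(one_hot_label, probs):
    """Negative log of the probability assigned to the true class."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs) if t > 0)

probs = softmax([0.0] * 10)  # equal scores give a uniform guess over 10 digits
label = [0.0] * 10
label[7] = 1.0               # the true digit is 7
loss = categorical_crossentropy(label, probs)
print(loss)                  # equals ln(10), roughly 2.303
```

A uniform guess over ten classes gives a loss of ln(10), about 2.303, so the 0.1711 reported after the first epoch already means the model puts most of its probability on the correct digit.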

Save trained model

model.save('mnist_cnn.h5')

Load and evaluate the model (test the accuracy on test data)

from tensorflow.keras.models import load_model

model = load_model('mnist_cnn.h5')

test_loss, test_acc = model.evaluate(test_images, test_labels)

10000/10000 [==============================] - 0s 49us/step

Print out the accuracy

test_acc

0.9918
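The accuracy metric is the fraction of images whose most probable predicted class matches the label. A minimal plain-Python sketch of that calculation follows; the `argmax` and `accuracy` helpers and the three-class toy data are invented for illustration and are not taken from Keras.

```python
def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(pred_probs, one_hot_labels):
    """Fraction of samples whose top predicted class matches the label."""
    correct = sum(
        argmax(p) == argmax(t) for p, t in zip(pred_probs, one_hot_labels)
    )
    return correct / len(one_hot_labels)

# Toy predictions and labels for 4 samples and 3 classes (made-up numbers)
preds = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4], [0.2, 0.5, 0.3]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]]
acc = accuracy(preds, labels)
print(acc)  # 3 of 4 correct
```

By the same definition, a test accuracy of 0.9918 on 10,000 images means 9,918 were classified correctly and 82 were not.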

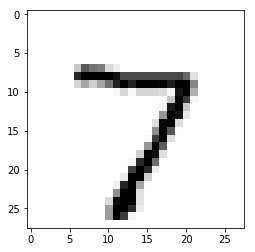

Show example test image:

import matplotlib.pyplot as plt

test_img = test_images[0].reshape(28,28)

plt.imshow(test_img, cmap='Greys')

<matplotlib.image.AxesImage at 0x7f076011e5f8>

Predict example test image using the model

prediction_probs = model.predict(test_img.reshape(1, 28,28,1)).flatten()

prediction_probs.argmax()

7

I ran this experiment after reading Deep Learning with Python by François Chollet. I strongly recommend this book to everyone interested in Python, deep learning, TensorFlow and Keras.

The source code is available to download from my GitHub repository: